We’re happy to announce the February 2025 update to Profitbase Flow, which introduces many new features, including additions for SQL Server, Microsoft Fabric and Azure Files, support for the Power BI writeback visuals, and more.

Microsoft Fabric

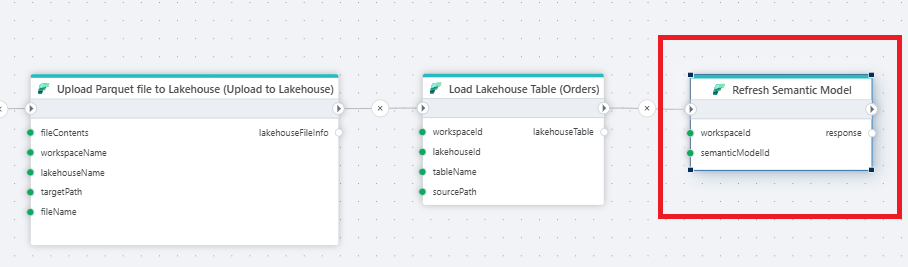

Refresh semantic model

You can now refresh a semantic model in Microsoft Fabric directly from Flow instead of using a Fabric Data Pipeline. You can configure the operation to be “fire-and-forget”, or to wait for the refresh to complete before the next action in the Flow is executed.

Read more here.
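To illustrate the difference between the two modes, here is a minimal Python sketch. It is not how Flow implements the action: `start_refresh`, `get_status` and the status strings are hypothetical stand-ins that the caller supplies.

```python
import time
from typing import Callable


def refresh_semantic_model(
    start_refresh: Callable[[], str],
    get_status: Callable[[str], str],
    wait_for_completion: bool = True,
    poll_seconds: float = 5.0,
    timeout_seconds: float = 3600.0,
) -> str:
    """Start a refresh and optionally block until it finishes.

    start_refresh and get_status are hypothetical callables; the status
    strings used here are assumptions made for this sketch.
    """
    refresh_id = start_refresh()
    if not wait_for_completion:
        # Fire-and-forget: return right away so the next step can run.
        return "Started"

    # Wait mode: poll until the refresh reports a final state or we time out.
    deadline = time.monotonic() + timeout_seconds
    while time.monotonic() < deadline:
        status = get_status(refresh_id)
        if status in ("Completed", "Failed"):
            return status
        time.sleep(poll_seconds)
    return "TimedOut"
```

In wait mode, the caller only continues once a final status is returned, which is what you want when later steps depend on refreshed data.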

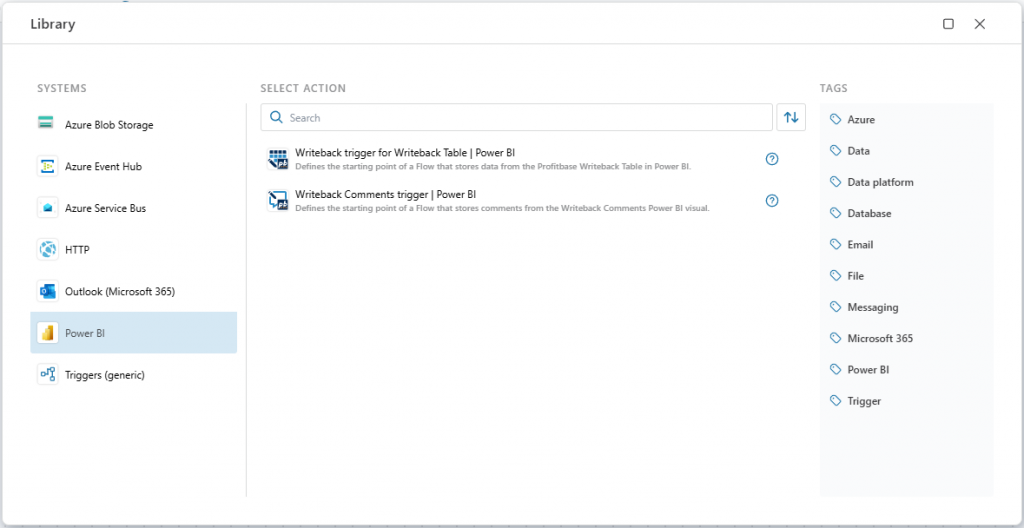

Power BI triggers

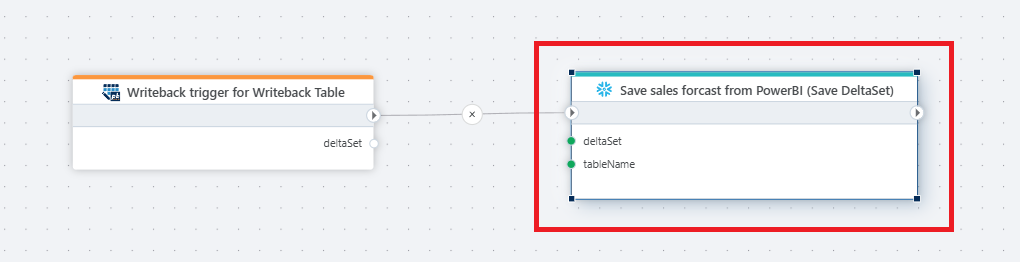

Writeback trigger for Writeback table

The Writeback Table visual for Power BI enables editing data in Power BI. Use this trigger to define the starting point of a Flow that saves the changes to the preferred database or service, for example Azure SQL database or Snowflake.

Writeback comments trigger

The Writeback Comments visual for Power BI enables users to add comments to reports, report lines, KPIs or data points, and save the comments to a database or service. Use this trigger to define the starting point of a Flow that saves the comments to the preferred database or service, for example Azure SQL database or Snowflake.

SQL Server

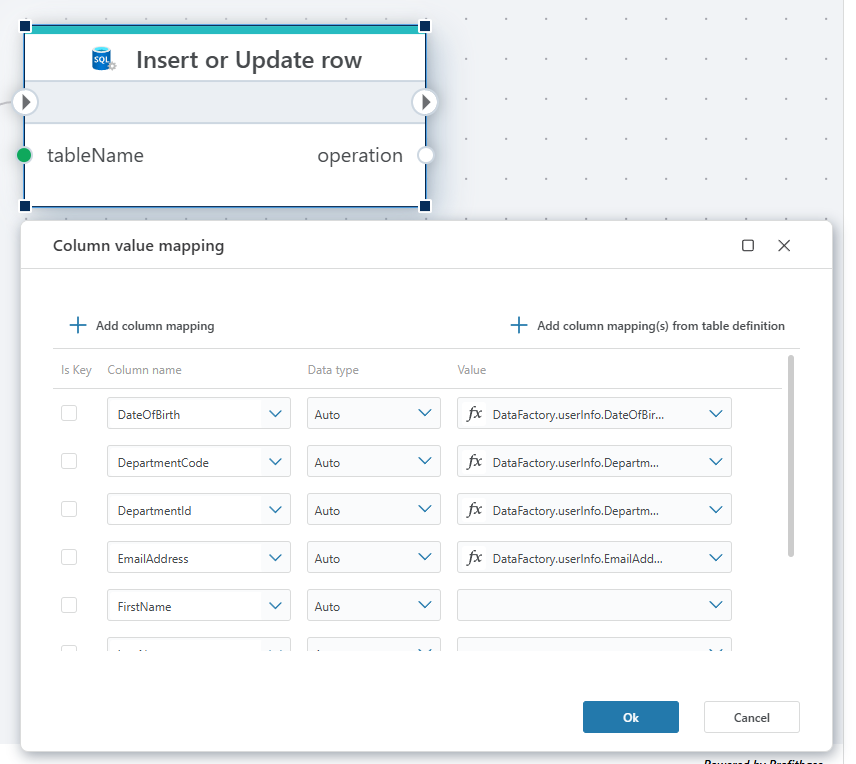

Insert or Update row

Although you can insert or update a row in a SQL Server database using the Execute command action, it requires writing SQL code. The new Insert or Update row action lets you perform this task without writing a single line of code: you define which row to update and specify the columns and their associated values.

Read more here.
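For readers curious about what an "insert or update" does conceptually, the following is a rough sketch of the pattern. It uses Python's built-in SQLite module rather than SQL Server, and the function name, table and columns are made up for illustration; it does not describe how the Flow action is implemented.

```python
import sqlite3


def insert_or_update_row(conn, table, key, values):
    """Update the row matching `key`; insert it if no row matched.

    `table` and the column names are treated as trusted identifiers in
    this sketch; only the values are passed as query parameters.
    """
    set_clause = ", ".join(f"{col} = ?" for col in values)
    where_clause = " AND ".join(f"{col} = ?" for col in key)
    cursor = conn.execute(
        f"UPDATE {table} SET {set_clause} WHERE {where_clause}",
        [*values.values(), *key.values()],
    )
    if cursor.rowcount == 0:
        # No existing row matched the key, so insert a new one.
        columns = [*key, *values]
        placeholders = ", ".join("?" for _ in columns)
        conn.execute(
            f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})",
            [*key.values(), *values.values()],
        )
    conn.commit()


# Hypothetical table: the first call inserts, the second updates the same row.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE budget (account TEXT PRIMARY KEY, amount REAL)")
insert_or_update_row(conn, "budget", {"account": "4000"}, {"amount": 100.0})
insert_or_update_row(conn, "budget", {"account": "4000"}, {"amount": 250.0})
```

The key identifies which row to touch, and the remaining columns carry the new values, mirroring the two things you configure in the action.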

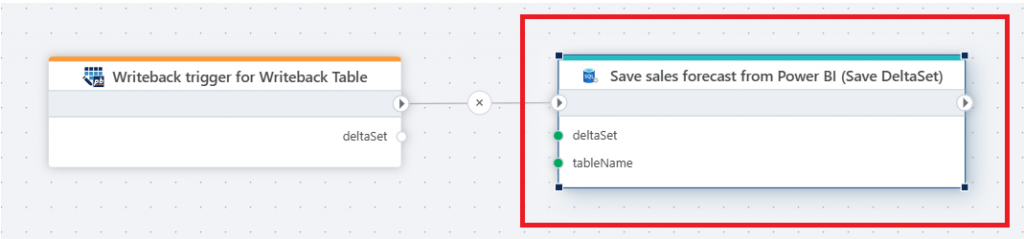

Save DeltaSet

When users edit data in the Writeback Table for Power BI and save the changes, the changes are represented as a Flow DeltaSet.

This action will save all changes in the DeltaSet by either inserting, updating, or deleting rows in a SQL Server or Azure SQL table.

Read more here.
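As a mental model, a set of changes can be thought of as a list of inserts, updates and deletes that are applied to a table one by one. The sketch below shows that idea in Python against an in-memory dictionary. The `Change` shape is hypothetical and is not the actual Flow DeltaSet type.

```python
from dataclasses import dataclass, field


@dataclass
class Change:
    """One edited row. The shape is hypothetical, not Flow's DeltaSet type."""
    op: str    # "insert", "update" or "delete"
    key: str   # primary key of the affected row
    values: dict = field(default_factory=dict)


def save_changes(table: dict, changes: list) -> dict:
    """Apply each change to `table`, a dict of primary key -> row dict."""
    for change in changes:
        if change.op == "insert":
            table[change.key] = dict(change.values)
        elif change.op == "update":
            table[change.key].update(change.values)
        elif change.op == "delete":
            table.pop(change.key, None)
        else:
            raise ValueError(f"Unknown operation: {change.op}")
    return table


table = {"A": {"amount": 10}, "B": {"amount": 20}}
changes = [
    Change("update", "A", {"amount": 15}),
    Change("delete", "B"),
    Change("insert", "C", {"amount": 30}),
]
save_changes(table, changes)
```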

Snowflake

Save DeltaSet

When users edit data in the Writeback Table for Power BI and save the changes, the changes are represented as a Flow DeltaSet.

This action will save all changes in the DeltaSet by either inserting, updating, or deleting rows in a Snowflake table.

Read more here.

Parquet

Support for dynamic column mappings

You can now provide dynamic column mappings to Parquet actions. This is useful when you do not know the schema of a Parquet file at design time and you don’t want to read all the data (all columns) from the file.

Optional column mapping

You no longer need to provide a column mapping when using the Parquet actions that read files, such as the Open Parquet file as DataReader action. You only need to provide a column mapping if you want to rename columns during the read operation, or if you want to ignore some columns in the file.

CSV

Optional column mapping

You no longer need to provide a column mapping when using the CSV actions that read files, such as the Open CSV file as DataReader action. Note that if you don’t specify a column mapping, the first line in the CSV file must be a header row (containing the column names), and all fields will be read as text.
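The behavior without a mapping is similar to how many CSV readers work by default. Here is a small Python sketch of the idea; the `read_csv` helper and its mapping format (source name to output name) are assumptions for illustration, modeled on the rename-or-ignore behavior described for Parquet, and not the Flow API.

```python
import csv
import io


def read_csv(text, column_mapping=None):
    """Read CSV text into a list of dicts.

    Without a mapping, the header row names the columns and every field
    stays a string. With a mapping (source name -> output name), only the
    mapped columns are kept, under their new names.
    """
    reader = csv.DictReader(io.StringIO(text))
    if column_mapping is None:
        return [dict(row) for row in reader]
    return [
        {new: row[old] for old, new in column_mapping.items()}
        for row in reader
    ]


data = "Account,Amount,Note\n4000,100.50,Q1\n4010,75,Q2\n"
all_columns = read_csv(data)
renamed = read_csv(data, {"Account": "account_id", "Amount": "amount"})
```

Note that `Amount` comes back as the string `"100.50"` in both cases; converting text to numbers or dates is a separate step.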

Profitbase InVision

The SQL and PowerShell script actions now support parameterizing which scripts to execute, in addition to selecting specific scripts. This enables creating generic Flows that can execute any SQL or PowerShell script in InVision.

Azure Files

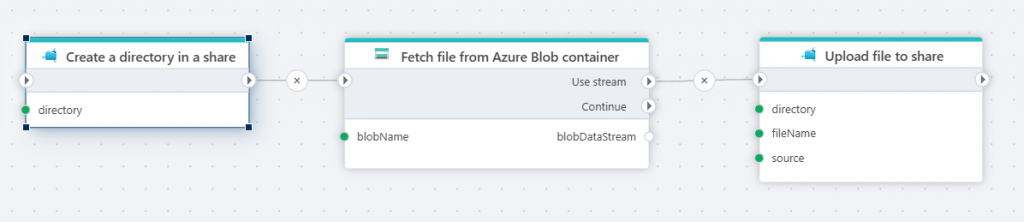

Create directory in a share

This action creates a directory in an Azure Files share if the directory does not already exist. This enables dynamically creating directories in an Azure Files share when executing a Flow.

Read more here.

Delete directory in a share

This action deletes a directory if it exists. This enables dynamically deleting directories in an Azure Files share when executing a Flow.

Read file from share as byte array

This action enables reading a file as a byte array instead of as a stream. Because a stream can only be read once, reading the file as a byte array is useful when you need to process the same file multiple times in the Flow. If you only need to process the file once, prefer the stream option.

Read more here.
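The stream versus byte array trade-off is easy to see in a few lines of Python. This is only an illustration of the general concept using the standard library, with a made-up payload; it does not use Azure Files or Flow.

```python
import hashlib
import io

payload = b"id,amount\n1,100\n2,250\n"

# A stream is consumed as it is read: the second read returns nothing.
stream = io.BytesIO(payload)
first_read = stream.read()
second_read = stream.read()

# A byte array can be handed to any number of consumers.
data = bytes(payload)
checksum = hashlib.sha256(data).hexdigest()
line_count = len(data.splitlines())
```

An in-memory stream like the one above can be rewound, but a stream backed by a network connection typically cannot, which is why holding the bytes is the safer choice when the content is needed more than once.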

OneDrive

Read file from OneDrive as byte array

This action enables reading a file as a byte array instead of as a stream. Because a stream can only be read once, reading the file as a byte array is useful when you need to process the same file multiple times in the Flow. If you only need to process the file once, prefer the stream option.

Read more here.

JSON

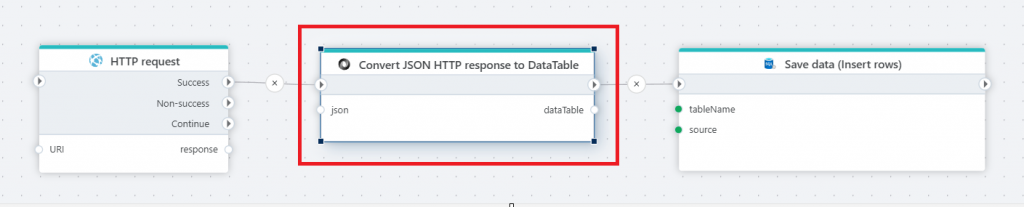

Convert JSON to DataTable

We’ve added support for converting a JSON string to a .NET DataTable, which makes it easy to work with JSON (for example, from web APIs) that can be represented in a table format. With the DataTable, you can apply a series of data transformations using, for example, the DataTableTranformer API in Flow.
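To show what "JSON that can be represented in a table format" means, here is a simplified Python sketch that turns an array of flat JSON objects into columns and rows. The `json_to_table` helper is hypothetical and only illustrates the shape of the conversion; the Flow action produces a .NET DataTable and may handle nesting and types differently.

```python
import json


def json_to_table(json_text):
    """Convert a JSON array of flat objects into (columns, rows).

    Columns are collected in first-seen order; missing values become None.
    """
    records = json.loads(json_text)
    columns = []
    for record in records:
        for name in record:
            if name not in columns:
                columns.append(name)
    rows = [[record.get(name) for name in columns] for record in records]
    return columns, rows


columns, rows = json_to_table(
    '[{"id": 1, "name": "Oslo"}, {"id": 2, "name": "Bergen", "region": "West"}]'
)
```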

Dynamic connections

Dynamically creating connections to systems (such as ERP systems or databases) enables storing credentials and connection strings outside of Flow. It also enables actions to connect to different endpoints during the execution of a Flow, for example in a loop where you want to connect to a different database on each iteration based on a variable.

The systems currently supporting dynamic connections are:

- Azure Blob containers

- SQL Server

- Visma.Net

- Visma Business NXT

- Xledger

- Dynamics 365 Business Central

- TripleTex

- PowerOffice GO
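The loop scenario described above can be pictured roughly like this. The sketch is plain Python and not Flow code; the `FLOW_CONN_<NAME>` environment variable naming and both helper functions are assumptions made up for the example.

```python
import os


def resolve_connection_string(database_name, environ=os.environ):
    """Look up a connection string stored outside the workflow.

    The FLOW_CONN_<NAME> variable naming is an assumption for this sketch.
    """
    variable = f"FLOW_CONN_{database_name.upper()}"
    try:
        return environ[variable]
    except KeyError:
        raise LookupError(f"No connection string configured in {variable}") from None


def connection_plan(database_names, environ=os.environ):
    """Resolve one connection string per database, as a loop would."""
    return {name: resolve_connection_string(name, environ) for name in database_names}
```

Because the connection details are resolved at run time from a variable, the same steps can be reused for every database in the loop without hard-coding any credentials.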

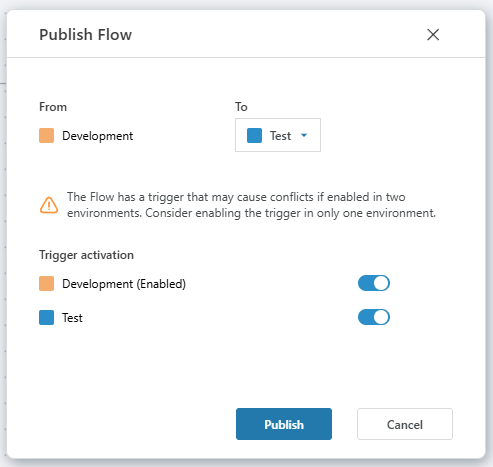

Option to disable trigger when publishing a Flow

If a Flow has a trigger, you now get an option to disable the trigger in one of the environments when publishing the Flow. This only applies to triggers that react to events in external systems, or triggers that run on a schedule. Endpoint triggers, such as the HTTP trigger, are not affected by this behavior.

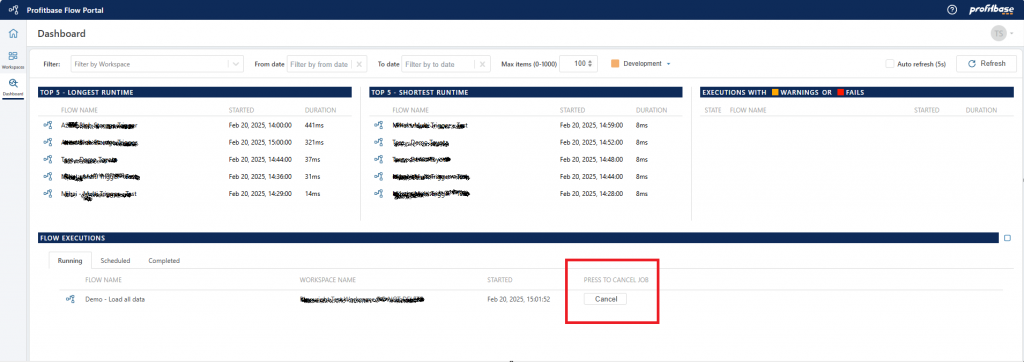

Cancellation of jobs from the dashboard

You can now cancel running jobs from the dashboard. When you cancel a job, a cancellation request is sent and the job stops at the first opportunity. Depending on what task the job is currently performing, it may stop immediately, or it may take several seconds or minutes to stop.
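Why a job might not stop instantly is easier to understand with a small sketch of cooperative cancellation, a common pattern where the job checks for a cancellation request between units of work. This Python example is a generic illustration with made-up step names, under the assumption that cancellation is checked between steps; it does not describe Flow's internals.

```python
import threading


def run_job(steps, cancel_requested):
    """Run steps in order, checking for cancellation between steps.

    Returns the number of steps completed. A step that is already running
    is allowed to finish, which is why stopping can take a while.
    """
    completed = 0
    for step in steps:
        if cancel_requested.is_set():
            break
        step()
        completed += 1
    return completed


cancel = threading.Event()
log = []
steps = [
    lambda: log.append("extract"),
    lambda: (log.append("transform"), cancel.set()),  # request arrives mid-job
    lambda: log.append("load"),
]
completed = run_job(steps, cancel)
```

Here the request arrives during the second step, so that step finishes and the third never starts.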

That’s all for now!

Happy automation!